Quote from The Economist: “Techno-babble: Since the launch in November of ChatGPT, an artificially intelligent conversationalist, AI is seemingly all anyone can talk about. Corporate bosses, too, cannot shut up about it. So far in the latest quarterly results season, executives at a record 110 companies in the S&P 500 index have brought up AI in their earnings calls”.

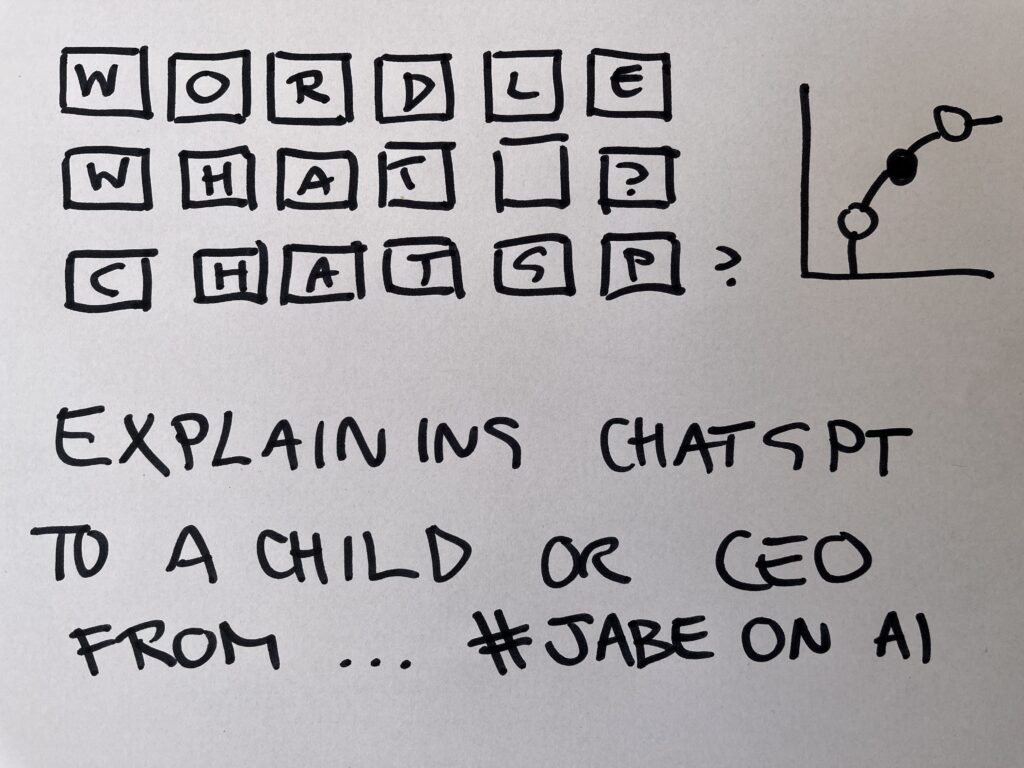

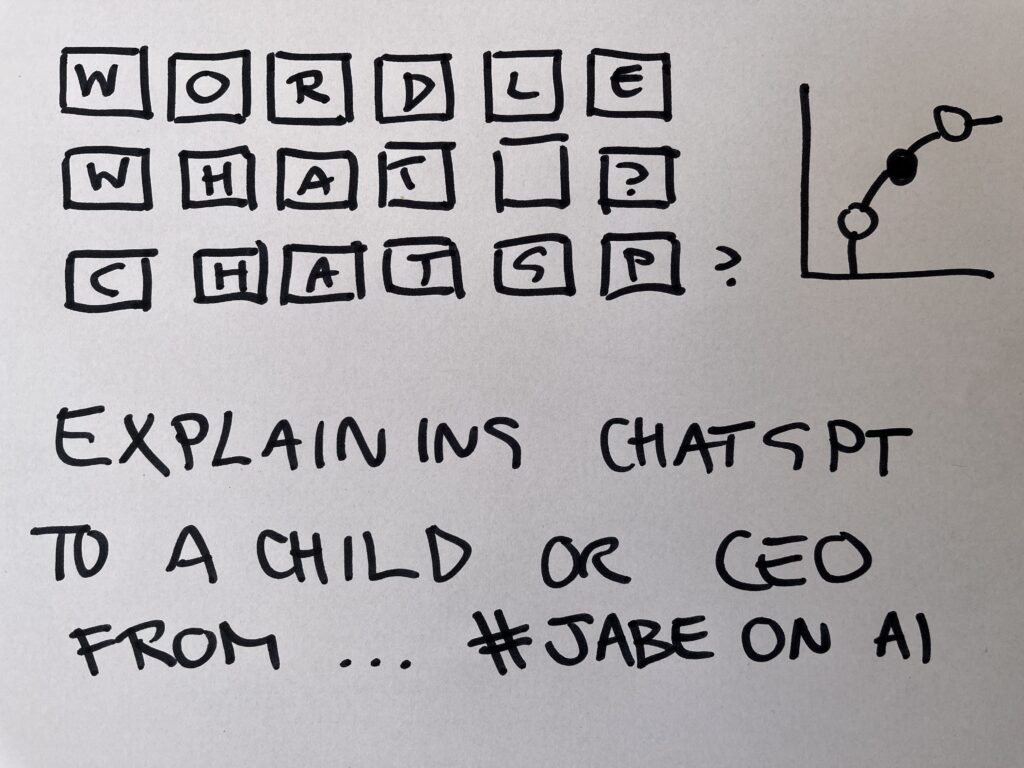

So, my mission over the next few weeks is to craft a description that eight-year-old schoolchildren and CEOs alike can readily understand. I was trying out some descriptions at the dinner table with my youngest daughter and my partner, and after I attempted a description of what ChatGPT actually is … my daughter Izzy said, “So that’s like Wordle, right, but for the whole internet?”

I was like … yeah, it actually is!

Let’s get to work …

OK, let’s split this into two parts:

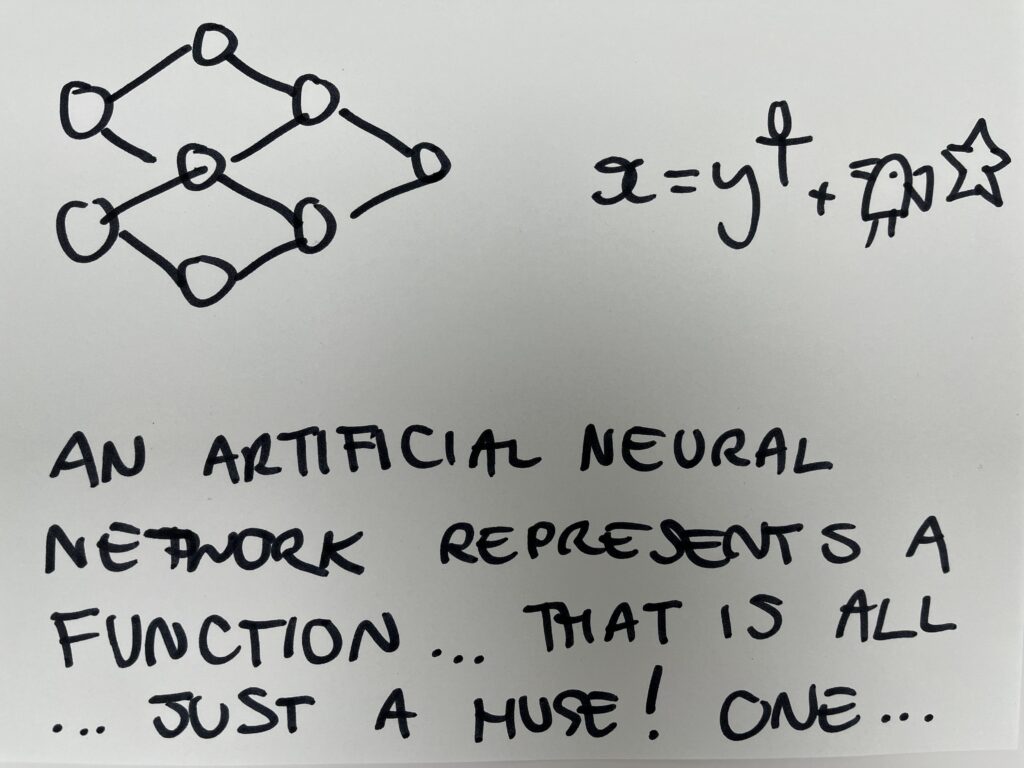

1. A Neural Network is just a function, and Machine Learning is just Function Optimisation

2. ChatGPT is just a Neural Network trained to play a game that I now call Whole Internet Wordle (WIW), and that is all. (Thanks to my daughter Izzy for this insight!!!)

So, let’s go …

Part one: A Neural Network is just a function, and Machine Learning is just Function Optimisation

OK, so this is my main point in terms of intuition. At the end of the day, Machine Learning is “just” function optimisation. There is a nice article on Medium (search for it), “How To Define A Neural Network as A Mathematical Function” by Angela Shi, which shows how to visualise various Artificial Neural Networks as their corresponding functions, and she does it without resorting to hieroglyphics!

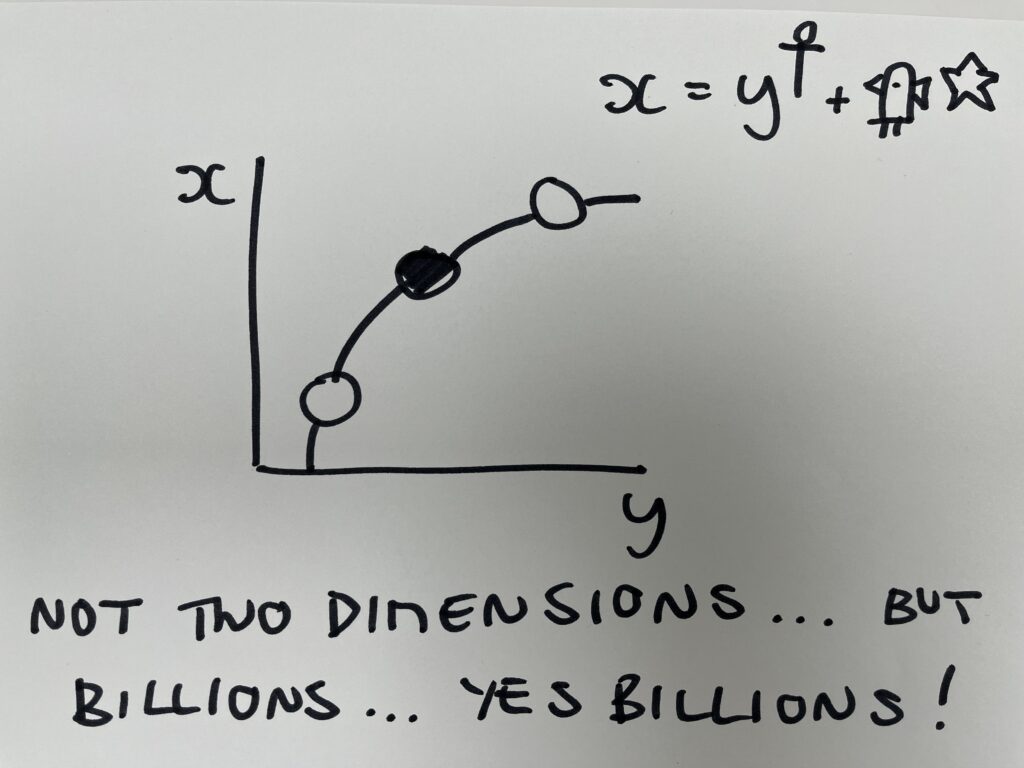

So, you can think of an Artificial Neural Network, such as ChatGPT, as the line on a plotted graph (the line is the function), except that instead of having two axes, or dimensions, it has hundreds, no, millions, no, NO, BILLIONS (sorry for shouting! 😉).

Oh, yeah, so your Artificial Neural Network gives you your answer, let’s call it Y, for any point on the other axis, let’s call it X … crudely speaking. Hoping to share the intuition here!
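To make “a neural network is just a function” concrete, here is a minimal Python sketch of a tiny two-layer network. The weights (`W1`, `b1`, `W2`, `b2`) are made-up numbers chosen purely for illustration, and the network takes two inputs rather than one; ChatGPT has billions of such numbers and a far more elaborate architecture, but the idea of numbers in, number out is the same.

```python
# Hypothetical, hand-picked weights for illustration only; a real network
# learns these numbers (its "parameters") during training.
W1 = [[0.5, -1.0], [1.5, 0.25]]   # 2 inputs -> 2 hidden units
b1 = [0.0, 0.1]
W2 = [1.0, -2.0]                  # 2 hidden units -> 1 output
b2 = 0.5


def relu(z: float) -> float:
    """A common 'activation': pass positive values through, clip negatives to zero."""
    return max(0.0, z)


def tiny_network(x: list[float]) -> float:
    """A two-layer neural network written as an ordinary function: inputs in, one number out."""
    # Each hidden unit: weighted sum of the inputs, plus a bias, then the activation.
    hidden = [relu(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    # Output: weighted sum of the hidden units, plus a bias.
    return sum(w * h for w, h in zip(W2, hidden)) + b2
```

Call it twice with the same inputs and you get the same answer, exactly like any other function: `tiny_network([1.0, 2.0])` gives roughly -3.7.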

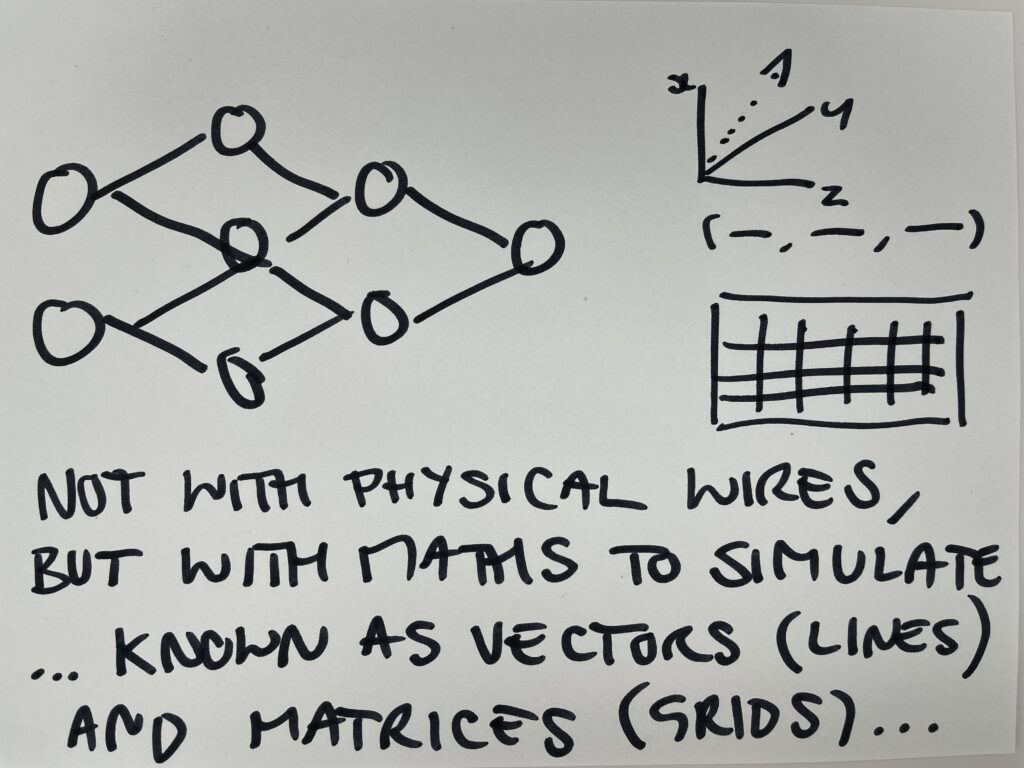

Originally, Artificial Neural Networks were wired up with physical wires and electrical charges, but it was found that they could be simulated with Maths.

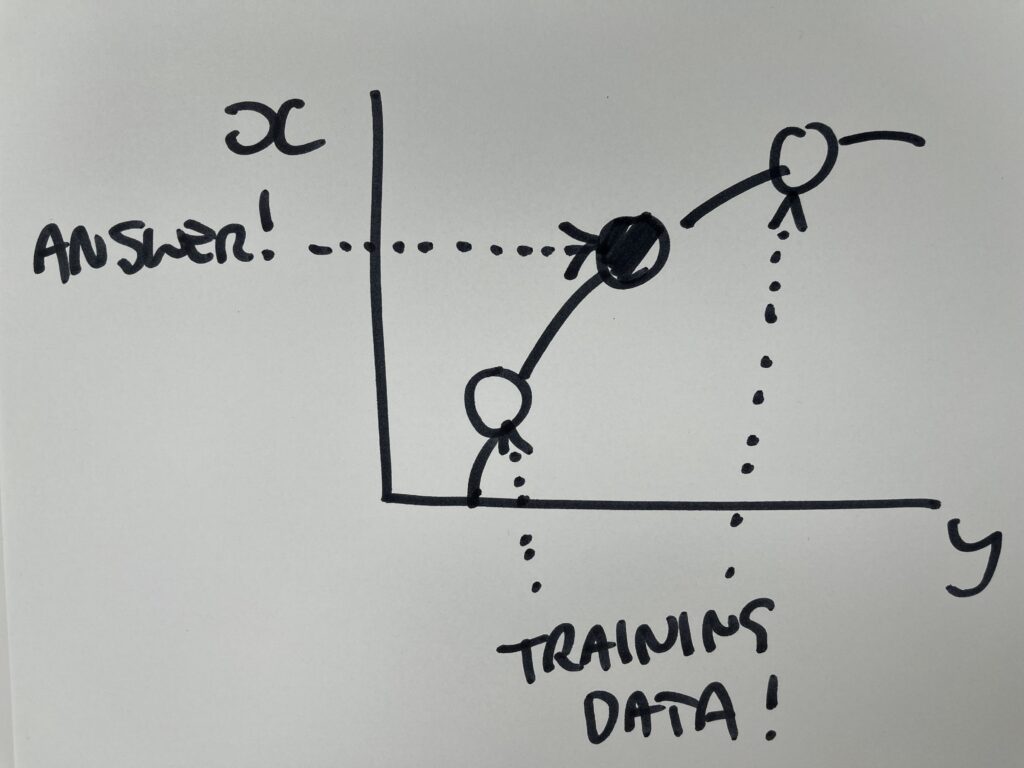

How do we train Artificial Neural Networks?

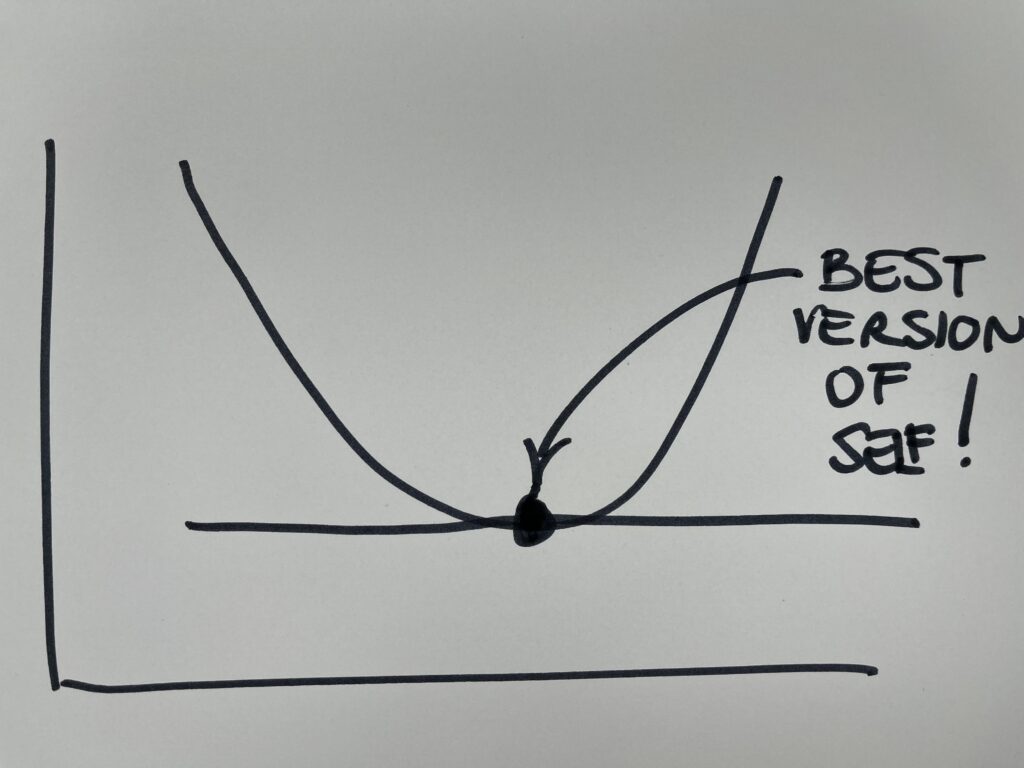

In simple terms, you make a guess at the function, see how wrong that guess was and give the amount of ‘wrongness’ a number. Plot that wrongness on a graph for each possible guess. You can measure the gradient (slope) of that graph, like those signs you get on the road warning you of a steep hill, and keep stepping downhill. Where the wrongness is lowest, at the bottom of a valley where the gradient flattens out to zero, you have your best guess: your function’s best version of itself. (There can be lots of these valleys, as in a mountain range, but let’s ignore that for now.)
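Here is a minimal sketch of that guess, measure, step-downhill loop (gradient descent) in Python, assuming the simplest possible ‘function’: a straight line y = w × x with a single adjustable number w. The data points are invented so that the best answer is w = 3; mean squared error stands in for ‘wrongness’, and the learning rate and step count are arbitrary choices.

```python
# Toy data that happens to follow y = 3x exactly (made up for illustration).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 6.0, 9.0, 12.0]


def wrongness(w: float) -> float:
    """Mean squared error: how wrong the guess 'y = w * x' is, as a single number."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradient(w: float) -> float:
    """Slope of the wrongness curve at w (the derivative of the mean squared error)."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def train(w: float = 0.0, learning_rate: float = 0.01, steps: int = 500) -> float:
    """Start from a guess and repeatedly take a small step downhill."""
    for _ in range(steps):
        w -= learning_rate * gradient(w)
    return w
```

Starting from a guess of w = 0, `train()` should end up very close to 3. A real network does the same thing, only with billions of numbers adjusted at once.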

Ready for part two? Here we go …

Part two: ChatGPT is just a Neural Network trained to play a game that I now call Whole Internet Wordle (WIW), and that is all it is! (Thanks to my daughter Izzy for this insight!!!)

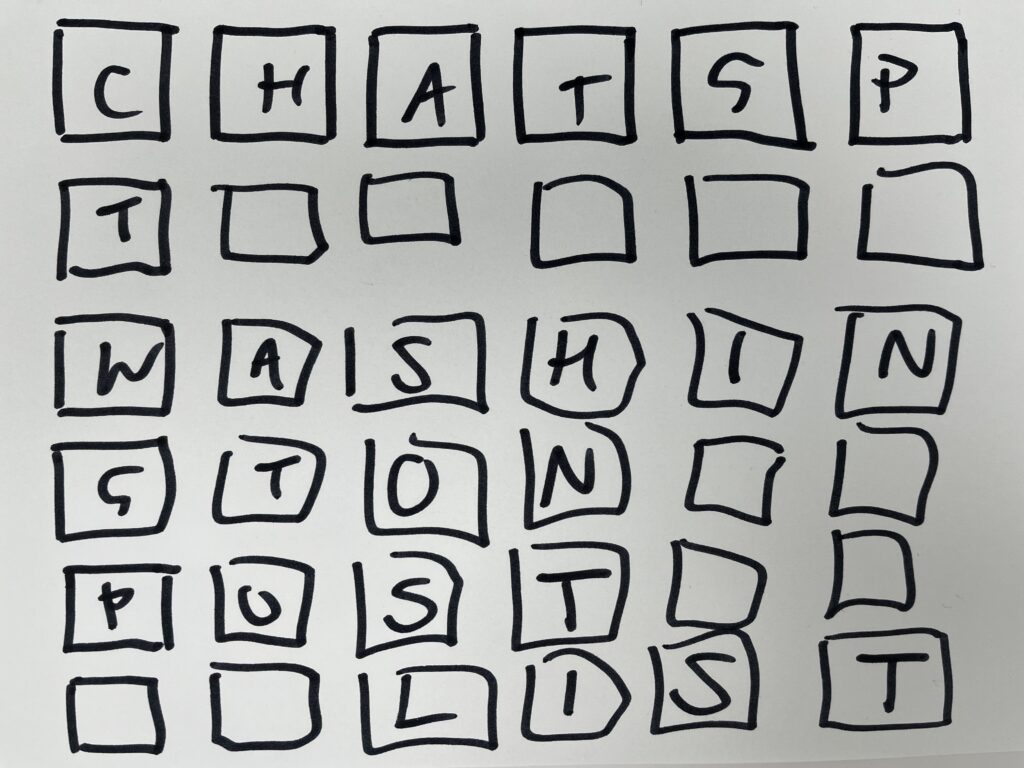

Here, and I oversimplify, is the game ChatGPT has been trained on. Take some text. Cover up the next letter (or ‘token’: in principle you can chunk text up into letters, parts of words, words, phrases and so on; GPT models actually use parts of words, but next letter is fine for our purposes).

Cover up the next letter and guess what comes next. Reveal the letter and use how right or wrong your guess was as a training signal to pass back into your Neural Network. Simple, huh? Well, yes.

OK, do that for each letter in your sentence. Now do that for every letter of every piece of text that makes up the internet, retraining on each one. Blimey! When you consider that this had to be done on a bunch of real computers, in a big data-centre warehouse, using electricity that somebody has to pay for, you can see why it took companies with lots of money. And a big carbon footprint (more on that another time).
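The cover-up-and-reveal game can be sketched in a few lines of Python. This is a deliberately simplified toy, not how ChatGPT works internally: instead of a neural network it simply counts which letter followed which (a so-called bigram model), but the training game is the same: look at a letter, guess what is hidden next, reveal it and learn from it, at every position in the text.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_next_letter_counts(text: str) -> dict[str, Counter]:
    """Play the cover-up game at every position: note which letter was hidden after each letter."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for current, hidden_next in zip(text, text[1:]):
        counts[current][hidden_next] += 1   # reveal the hidden letter and learn from it
    return counts


def guess_next_letter(counts: dict[str, Counter], current: str) -> Optional[str]:
    """Guess the letter most often seen after `current`, or None if `current` was never seen."""
    if current not in counts:
        return None
    return counts[current].most_common(1)[0][0]
```

For example, trained on "the cat sat on the mat", it learns that ‘a’ is always followed by ‘t’, so `guess_next_letter(counts, "a")` returns "t". Scale the text up to a large slice of the internet and swap the counting for a neural network, and you have the gist of the game.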

Side note: each time you play with ChatGPT, it has to do billions and billions of calculations, which uses loads of energy and costs these companies money. Why do they offer it for free, you ask? Out of the goodness of their soft hearts? Nope. You are training the network for them.

Anyhow, playing ‘Wordle’ is a pretty good analogy for what ChatGPT is doing. I like to call it “Whole Internet Wordle”, or WIW for short.

Only, it is not the whole internet but rather a subset. To see exactly which websites, search for the Washington Post article “Inside the secret list of websites that make AI like ChatGPT sound smart”.

And they do sound smart. To quote the great #RodneyBrooks in the article “Just Calm Down About GPT-4 Already”: “What the large language models are good at is saying what an answer should sound like, which is different from what an answer should be.” (Rodney Brooks, Robust.AI)

I love that, “what an answer should sound like”. Bang on – Quote #JabeOnAI 😉

Part three (of two): A bit more detail on Neural Networks: Architecture and Flavours

Creating Neural Networks is an art. I wrote about that around five years ago, so you can look me up on that if you like, but I will also elaborate on it again soon on #JabeOnAI. For now, I will just say that there is an additional element, worked out by trial and error, called ‘Architecture’. In simple terms: how many layers there are, what is connected to what, and how you plumb it all together.
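As a rough illustration of what ‘architecture’ means in numbers, here is a small Python helper that counts the adjustable weights and biases in a plain fully connected network described only by its layer sizes. The layer sizes in the example are hypothetical, and ChatGPT uses a different (transformer) architecture, so treat this as a sketch of the plumbing idea rather than anything about GPT itself.

```python
def count_parameters(layer_sizes: list[int]) -> int:
    """Count weights and biases in a fully connected network with the given layer sizes.

    Connecting a layer of n_in units to a layer of n_out units needs
    n_in * n_out weights (every unit wired to every unit) plus n_out biases.
    """
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))
```

A network shaped `[784, 128, 10]` already has 101,770 adjustable numbers; changing the plumbing changes that count, and with it how well the function can fit the data.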

And there are flavours of Machine Learning too; but neither of these should undermine the intuition described above…

Flavours of Machine Learning

Side note: there are three main flavours of Machine Learning:

- Vanilla (otherwise known as Supervised Learning):
  - What is the number? That is called ‘regression’.
  - What class is that? That is called ‘classification’.
- Strawberry (otherwise known as Unsupervised Learning): separate all this data into several groups (clusters), without being told what the groups are.
- Pistachio (the sophisticated choice, otherwise known as Reinforcement Learning): learning from positive or negative feedback on a ‘decision’, such as left or right.
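For the Strawberry flavour, here is a minimal Python sketch of unsupervised grouping: a one-dimensional k-means with two groups. Nobody tells it which number belongs where; it finds the split on its own. The example data are made up, and k-means is just one common clustering method among many.

```python
def two_means_1d(values: list[float], iterations: int = 10) -> tuple[list[float], list[float]]:
    """Split numbers into two groups with no labels given: a 1-D k-means with k = 2."""
    if min(values) == max(values):
        return list(values), []                   # nothing to separate
    centre_a, centre_b = min(values), max(values)  # simple starting guesses
    for _ in range(iterations):
        # Assign each value to whichever centre is nearer (ties go to group a).
        group_a = [v for v in values if abs(v - centre_a) <= abs(v - centre_b)]
        group_b = [v for v in values if abs(v - centre_a) > abs(v - centre_b)]
        # Move each centre to the average of its group.
        centre_a = sum(group_a) / len(group_a)
        centre_b = sum(group_b) / len(group_b)
    return group_a, group_b
```

Given `[1.0, 1.5, 2.0, 10.0, 11.0, 12.0]`, it separates the small numbers from the large ones without ever being told that those two groups exist.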

I will go into this in a separate post.

Part four (of two): Why should I care?

Why is all this important? More on that soon … as I say, I am pulling the threads together …

To recap, Wordle (other similar games are available) is about swapping letters in and out and getting feedback. LLMs train by guessing hidden text, revealing it, scoring how well they did and feeding that back into the system (to rather oversimplify). They do this with the “whole internet” of text, reprocessing for each word in all that text. In essence, the game they are playing is: which word is most likely to come next, given the words in the sentence we already have? The answer comes from the function (a multi-dimensional surface) created by running all of that training.
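The “which word is most likely to come next” game can also be run forwards to produce text. Below is a deliberately crude Python sketch: it counts which word followed which in a tiny made-up text and then repeatedly appends the most common follower. Unlike ChatGPT, it looks only at the last word rather than everything written so far, uses counts rather than a neural network, and always takes the top choice (ChatGPT usually samples with some randomness).

```python
from collections import Counter, defaultdict


def build_next_word_table(text: str) -> dict[str, Counter]:
    """For every word, count which words followed it in the text."""
    words = text.split()
    table: dict[str, Counter] = defaultdict(Counter)
    for word, next_word in zip(words, words[1:]):
        table[word][next_word] += 1
    return table


def generate(table: dict[str, Counter], start: str, max_words: int = 5) -> str:
    """Greedy generation: keep appending the most common next word after the last word."""
    words = [start]
    for _ in range(max_words):
        options = table.get(words[-1])
        if not options:                # never seen anything follow this word: stop
            break
        words.append(options.most_common(1)[0][0])
    return " ".join(words)
```

Built from "the cat sat on the mat and the cat sat down", two steps from "the" gives "the cat sat". Each new word is fed back in as the starting point for the next guess, which is the basic loop behind a chatbot’s reply.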

So, I am working my way towards a ‘folksy’ description for all this.

A trained artificial neural net is essentially a mathematical function: you feed in your inputs and it gives out a response. Try picturing a multidimensional surface; hmmm, OK, maybe a sphere (with its origin point in the centre) … Anyhow …

So, the points in your multi-dimensional space must have some correspondence in “the real world” that gives the predicted value its meaning. Is it the colour of a pixel, a sound, a word, a letter … a concept in a dictionary …? That is what this “pattern” in the data refers to, and indeed predicts.

You can also link this to search and game-playing algorithms, where a point in the multidimensional space represents the “best” action of some kind. Again, what is the correspondence?

This relates a little to the correspondence between our senses, our brains and our conscious experience. For a great elaboration on some of these points, read the new book “Being You” by Anil Seth, one of the professors at my old university, Sussex. More on that soon.

Now, if you think about ChatGPT, in a sense its patterns live in the closed world of text. To what extent do they correspond to anything beyond that text? Do these patterns in text have anything to say about the world, about our thinking, or about anything else? That is the deeper philosophical question being considered.

Stephen Wolfram poses this question in his new book / blog, “What Is ChatGPT Doing”, but he is coming from the perspective of machine functionalism. I am waiting to receive a copy of the book; once I have it, I will look to demolish his argument here in #JabeOnAI 😉